The Only Sure Thing with AI Is Writing Will Get Blander and the Rich Will Get Richer

AI programs aren't about to replace novelists. But that doesn't mean they won't damage writers.

The release of OpenAI’s ChatGPT writing program has produced yet another round of AI art debates, with almost everyone either fearfully predicting or hopefully heralding an impending revolution in every field. (“ChatGPT Will End High-School English,” says The Atlantic. “Will ChatGPT make lawyers obsolete? (Hint: be afraid),” warns Reuters. Etc.) But the greatest focus has been on art and writing. Are novelists about to be replaced with cheap AI? Or, as techno-optimists say, are we about to enter a new era in which AI helps humans create new, better, and more interesting works of art?

Personally, I feel pretty confident that neither of these things is imminent. AI programs are not about to replace our great artists, and while they can assist us, they will probably do so in more limited ways than many imagine. (I’ve written about the limitations of AI writing before. As a counterpoint, I interviewed Chandler Klang Smith about her collaborations with AI.) But that doesn’t mean everything is fine and dandy for artists in this era. My best bet is that the same thing that has happened everywhere else will happen here: things will get blander and the rich will get richer.

ChatGPT Is Full of Shit

First, let’s talk about the limitations of AI (or really “AI”) at this stage. ChatGPT is an interesting program that would certainly blow the mind of someone from 50 years ago. Yet right now it is hardly the writer/artist/teacher/etc. replacement some claim. Fundamentally, ChatGPT is full of shit. It spits back generic text-stuff that takes the shape of textual forms with little regard for accuracy or content. John Warner noted that ChatGPT invented fake articles it claimed were real. Others pointed out that it failed basic math puzzles, producing, as Anil Dash joked, “a computer that is bad at math.”

Basically, it’s a robot version of Bart Simpson making crap up for a report he didn’t research.

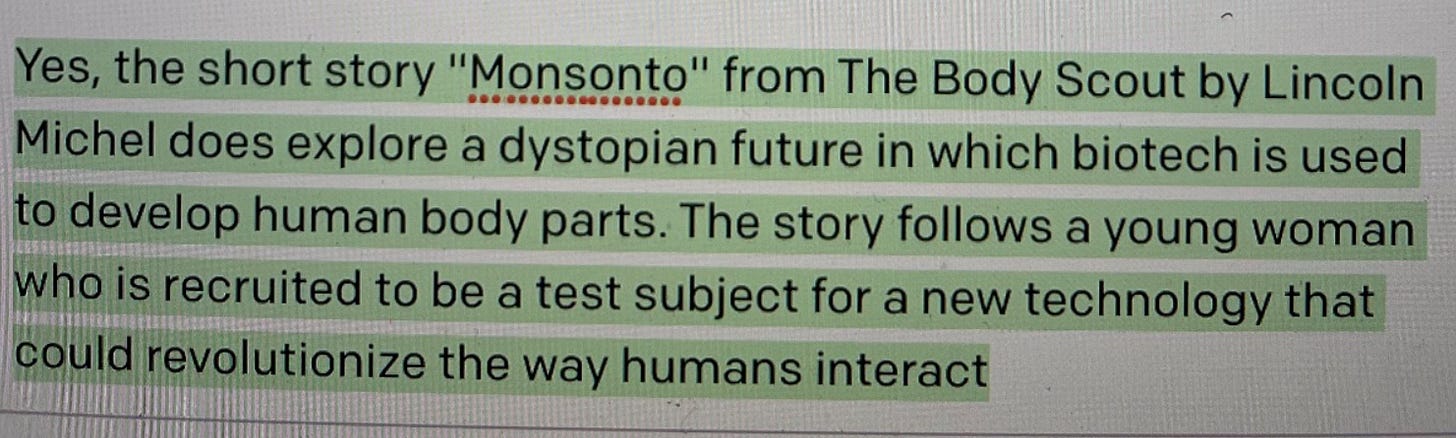

The Bart comparison seems apt because ChatGPT is lazy. Well, it’s not really lazy, since it isn’t sentient, but it mimics the process of a lazy writer. Before I played with ChatGPT myself, a friend who knows I dislike AI art DM’d me a conversation about my novel, The Body Scout, which the program dubs a “collection of short stories” and then simply… invents an example story with a misspelled title?

When asked for similar books, it also made stuff up:

Gigantic was a lit mag, not a book. The Collected Schizophrenias is an excellent essay collection by one of my novel’s blurbers, Esmé Weijun Wang, but not “in the same genre” as a science fiction novel. Meanwhile The Sun and Other Stars is a title I’d never heard of before but apparently is a tender coming-of-age novel set in the Italian Riviera that “tells the story of a widowed butcher and his son whose losses are transformed into love.” Perhaps great but, uh, not my vibe.

ChatGPT is also quite bad at following instructions. I gave it a fairly generic creative writing prompt: write about an old man sitting by a lake without mentioning the man or the lake. Its first sentence was “The old man sat by the water’s edge.” Whoops. Worse, I asked for a six-word story about baby shoes and was given an eight-word store slogan:

A program that can’t follow simple instructions and makes up facts is unlikely to replace professors anytime soon. My examples above are humorous, but the problem is pretty serious for AI. A recent Wired article noted that large language models have major trouble understanding negation, which means asking them for emergency medical advice—something that theoretically would be a great benefit of AI—easily backfires: “when a user searched how to handle a seizure, they received answers promoting things they should not do—including being told inappropriately to ‘hold the person down’ and ‘put something in the person’s mouth.’” Such advice could be deadly.

Even if we aren’t talking about potential death, as long as AI routinely spits out errors and bullshit, it is not a useful replacement for engineers, doctors, professors, technical writers, or any other field where being correct matters.

What AI Can Replace and What It Can’t

Still, even if we can agree that AI programs mostly produce bland nonsense at this stage, it is certainly possible they’ll get much better very quickly. Many of the problems listed or linked to above can likely be solved. So the question is: what can AI writing programs replace, and what, if anything, can’t they?

I’ll present the quasi-optimistic half of my take first. I don’t think we are in any danger of AI writing programs replacing great novels or even, frankly, generic ones. Perhaps I’m a bit of a romantic in this one area, but I believe that humans really do care about the humanity of art. We go to art to see the unique visions, ideas, and creations of other humans. Sure, every now and then there’s a duck that “paints” by walking across a canvas after its owner dips its feet in paint, but this is a goofy gimmick. No robust market for duck art or elephant art or dog art has emerged, much less replaced our interest in human painters.

I feel confident the same will be true for computer-generated art. Yes, there will be a few gimmick books that get attention. Some pranksters might get AI poems accepted into lit mags. Etc. But books by ChatGPT are not going to replace Chekhov any more than a gimmick like hologram Tupac—who appeared at Coachella over a decade ago—made touring by living musicians irrelevant.

There is a major caveat to add here, which is that the line between what counts as human-produced will get blurry. Computers already assist artists in many ways, and it’s easy to imagine AI tools that would benefit authors greatly without compromising artistic integrity at all. For example, swapping a passage from first-person POV to third-person POV is—at least on the initial pass—a bunch of robotic work that a future AI program could do in an instant. As an author, I’d kill for that. And if I go to ChatGPT and ask it to spit a bunch of titles at me, they might spark ideas even if I use none… although my first attempt didn’t yield much of interest:

Yet the more involved AI is in the composition, the more the work starts to feel like it isn’t yours. Where to draw the line between computer-assisted work and computer-composed work will be a major source of debate.

Even if you don’t agree that people who enjoy books care about the human intentions behind them, and you think readers will be just as happy with Anton ChatGPT-kov, nothing I’ve seen indicates AI programs are capable of writing coherent long-form text, let alone interesting long-form text. AIs like ChatGPT are programmed to spit back the most expected material possible. They’re a long-form version of the email auto-reply features that pop up “Sounds good” and “Great, thanks” buttons. Maybe they save you two seconds of typing, but there is no personality or poetry there.

At the New Yorker, Jay Caspian Kang tried to get ChatGPT to rewrite his novel, and the result was an unfunny parody of a boring literary novel. In addition to being generic, ChatGPT and similar AI programs quickly descend into contradiction, nonsense, and gibberish the longer the text goes on. They can write a few generic paragraphs of bullshit, but they can’t—as far as I’ve seen—write a sustained and coherent story, even at the level of a formulaic, ghostwritten airport thriller. You can prod them into creating this material, but it takes a ton of work to do so, so it isn’t necessarily a timesaver.

Which leads to the more depressing side of my take: there’s not a clear economic benefit to replacing novelists with AI programs.

Writing Is (Sadly) Cheap

Bluntly, authors don’t cost a lot of money. There are exceptions who get million-dollar deals, but those people get paid because they have a huge fanbase, which AI writing programs do not. If we’re talking about your average novel, then we’re talking about a pretty cheap cost to the publisher. Most of the expense of publishing a novel isn’t the writing but the printing, retail markup, distribution, and so on. Your average debut literary novel might cost the publisher as little as $10,000 for the prose itself, and for that they get a free publicist and marketer to boot. Authors accept this low pay because it’s their artistic creation and they get (one hopes) some non-monetary benefits from seeing their art in the world. Other professionals don’t work that way. The AIs we have now would need a rather massive amount of editing to produce even generic books. This means you would have to hire editors to sculpt and shape the AI’s output, which might end up costing you more than the $10,000 advance. And to the degree that unpaid author promo work—interviews, essays, social media posts on accounts they’ve built up over years—sells books, that work would have to be replaced with paid labor too.

It’s possible to think of some exceptions. Right now, the James Patterson factory pumps out a dozen books a year by employing ghostwriters and co-writers who make decent money. Could they be replaced with AI programs plus cheap editors to clean up the output? Maybe. I certainly think the self-publishing world will be inundated with AI-vomited gibberish that just hopes to capitalize on search trends and fool readers (there’s already lots of plagiarized, web-scraped material in that world). But for the books most people reading this newsletter care about, authors seem safe for now.

The (Even More) Depressing Half of This Take

So the above is the optimistic-ish side of what I see, but that doesn’t mean I think things will turn out great for writers. Because even if artistic novels seem safe (for now), many other forms of writing will be easily replaced. We’ve already had AI programs producing sports “stories” from box scores and it’s easy to imagine lots of other simplistic and formulaic writing that AI can replace. Low-quality news recaps. Listicles. Plenty of publicity emails and social media posts. The general online content churn that makes up most writing on the web. These can all be replaced with AI programs either now or in the near future.

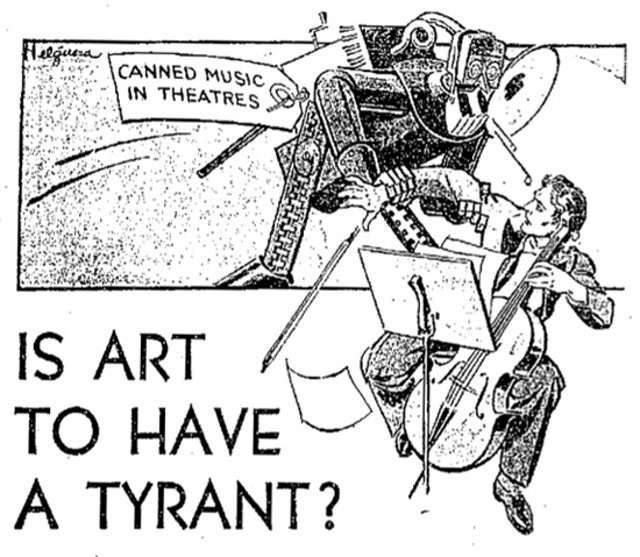

The problem is not so much that there’s anything sacred about fifty websites all quickly pumping out a 200-word post about a new Marvel announcement. Replacing those with AI writing will make those already very dull and generic posts even blander, but it hardly seems worth weeping over by itself. Similarly, one can easily imagine websites and magazines employing AI art generators for header images and spot illustrations. This also will make everything just a bit blander, but doesn’t seem like an apocalyptic scenario.

The problem is that those types of writing (and art) are both training grounds and income streams that allow many artists to create the art that you do care about. Excluding the independently wealthy, artists need income. And cobbling together freelance gigs loosely related to your field is a natural way to earn money. I used to write a dozen-plus pieces a month for 50 to 500 bucks apiece to make rent. Much of this text added little value to humanity—I’m under no delusions there. An editor might call me up and say, “[Famous author] is adapting [famous novel] for TV. Here’s the Deadline link. Can you get me 150 words in an hour?” And while I quickly spat out some text, a couple dozen other writers were doing the same thing at a couple dozen other websites.

These posts were hardly poetic, but they paid my bills. And they taught me valuable skills about writing to deadline, writing in a publication’s style, copyediting, proofreading, and so on. I could name countless writers who followed a similar path. One of my best friends once told me the best thing he ever did for his writing wasn’t getting an MFA or reading great books, but getting a gig writing two 200- to 500-word blog posts per day, which forced him to learn how to quickly generate ideas and coherent prose.

If you aren’t a freelance writer or artist, maybe this means nothing to you. Still, think about the algorithmic writing you already encounter on a daily basis. The generic auto-response text. The spam bots. AI-generated ads. Computer sports recaps. This text is above all boring. Our eyes glaze over. It’s disposable, like so much else in our modern world of fast fashion that falls apart in weeks and produce that tastes like paper. Late-stage capitalism cares about quantity over quality every time. Words are the same as widgets in this regard.

So in the very near future, expect a whole lot more of this dull-as-dirt text and generic images. Meanwhile, artists will have fewer places to earn money and practice their skills and the rich tech bros who already own almost everything will get richer and richer.

If you want to support a non-robot author, please consider subscribing to this newsletter or checking out my science fiction novel The Body Scout, which The New York Times called “Timeless and original…a wild ride, sad and funny, surreal and intelligent” and Boing Boing declared “a modern cyberpunk masterpiece.”
