The Library of Blather

A.I. writing programs promise to make the internet unusable. Maybe that's a good thing?

“For every rational line or forthright statement there are leagues of senseless cacophony, verbal nonsense, and incoherency.” — Jorge Luis Borges

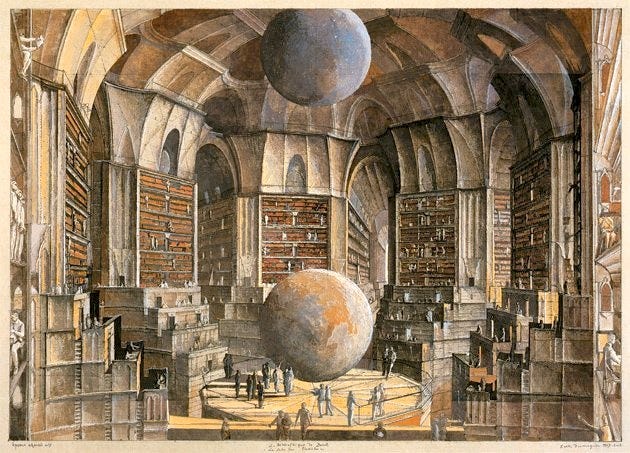

In “The Library of Babel,” Borges imagines an infinite library filled with every possible combination of text. Every work of genius must be found in this library, somewhere, but most of the rooms are filled with mere gibberish. Nonsense phrases repeated for entire books. Letters mashed together that don’t form words. Finding real knowledge is nearly impossible. The residents, robbed of meaning, turn to mysticism, depression, and suicide.

Although the story was published in 1941, it’s easy to read “The Library of Babel” as a metaphor for the internet. Here, a seemingly infinite amount of text exists, much of it pure gibberish thanks to attempts to evade spam filters and catch random keyword searches. Already, one of the fundamental problems of the internet is the sheer volume of text. How does one wade through this impossibly vast ocean (searching through fake news, spam, hoaxes, jokes, and idiocy) to find any island of meaning or truth?

It’s into this environment of near-infinite, unnavigable text that the tech world has introduced its latest (supposed) revolution: “A.I.” writing programs whose chief function is to spew out never-ending streams of verbiage.

The Library of Blather

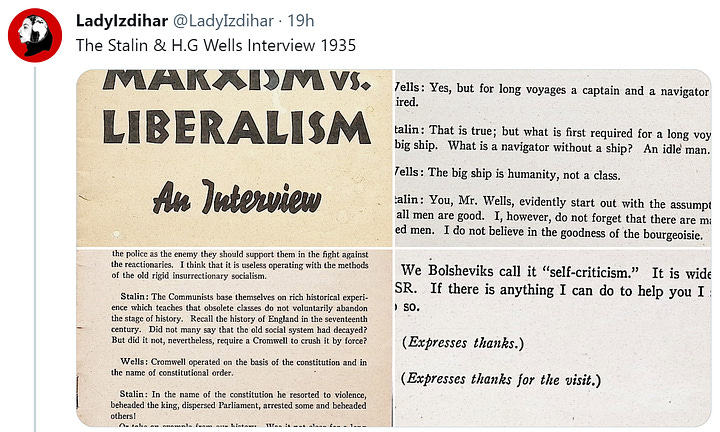

If you read this newsletter, you know I’ve been thinking (or perhaps more accurately grumbling) a lot about ChatGPT and other LLM (large language model) programs that the tech world is trying to brand as “artificial intelligence.” I’m hardly the only one thinking about them. You can barely go a day without seeing an article about how chatbots will either radically transform or ruin every single aspect of life. I won’t rehash the arguments here except to say that so far the attempts to use chatbots and AI writing programs have been embarrassing… at least if you care about accuracy. The Grayzone tried to use chatbots for reporting and ended up with completely fake “sources.” CNET tried to outsource writing articles to chatbots and ended up with articles riddled with basic errors. Etc. New stories of AI misinformation and scams crop up daily, and will certainly only continue. Just this week I saw an 80k account (aka not a complete rando) “fact check” someone who posted a historical interview between H.G. Wells and Stalin because ChatGPT told him it didn’t happen.

Even if future chatbots are capable of producing accurate information on request, they will also be capable of producing false information when directed to. And either way, they are capable of producing an infinite stream of text that we will have to handle. So what happens when the internet becomes the Library of Babel?

Or, actually, something worse. In Borges’s library, most books are filled with pure nonsense, but that’s something you can spot quickly. With the coming of chatbots, we’re looking at a torrent of text that always seems real even if it is filled with lies, errors, incoherencies, and misinformation. And that will seem human even when it isn’t. Each text in this library is suspect, but impossible to evaluate. Less nonsense than rubbish.

Let’s call this the Library of Blather.

A.I. Use Cases Imply Their Own Uselessness

An odd thing I’ve noticed about the chatbot hype is that almost every use case works for select individuals but backfires with mass adoption. People have started using chatbots to hack dating apps by letting the bots do all the flirting work for them. Google just demoed “A.I.” for their workspace apps, boasting about how workers could let chatbots respond to email chains they don’t have time to read. And of course last month there was a lot of discourse about fiction writers who “don’t have time” to write using chatbots to script their story ideas.

Each of these use cases, and many similar ones, could work in theory if you’re one of a few daters/workers/writers using “A.I.” You get the benefit of infinite text production, giving you a leg up over your competition. Yet all of them are pointless if everyone is producing infinite text. If everyone is using chatbots to talk on Tinder, then individuals have no advantage and indeed the whole enterprise starts to seem pointless. Robots talking to robots. The text gives you no way to winnow down potential dates. You might as well go back to just judging everyone on pictures (crossing your fingers those aren’t bots and/or A.I.-generated images) or simply deleting the apps altogether.

If everyone in the office is avoiding reading emails and letting chatbots reply, then no one is learning anything and the volume of work emails is likely to increase. Who ever wanted more office emails? Who ever thought longer work emails would solve anything? Even if all you’re doing is opening emails, skimming, and running a chat program, a dramatic increase in email volume wouldn’t lessen your workload. We already waste so much of our precious time handling emails we don’t even read: unsubscribing from newsletters, deleting promotional emails, etc.

As for fiction writing, well, magazines and publishers already get far more submissions than they can possibly handle. The self-publishing world is an ocean of mostly garbage that no one reads. An individual author might gain an edge over the competition if they can pump out 1,000 stories or 1,000 novels in a month, I guess. Maybe. But if a bunch of people are doing this, then the already overwhelming supply of fiction just gets larger without the number of readers increasing at all. What’s the endgame? Building readbots that will scan writebots’ novels, with no humans involved?

Poisons and Antidotes

The dream of the chatbot companies is to sell the poison and the antidote.

A bizarre, if telling, example is Google’s A.I. demo video, which showed one office worker asking the A.I. to summarize emails they didn’t have time to read, followed by an office worker using A.I. to generate an email they didn’t have time to write. What point do such emails serve, written and read by no one, except to make Google money?

If Tinder is filled with bot messages, then people can pay an “A.I.” to filter them away. If magazines are overwhelmed with ChatGPT stories, then pay for a ChatGPT screener to reject submissions for you. Then, of course, you sell Premium ChatGPT to people who want a leg up to get around the filters, and then you sell Ultra NoBot Defense programs to the daters and magazines. So on and so forth. A never-ending “A.I.” arms race where everyone pays and no one (except the shareholders) benefits.

As an economic model, this might work.

And for most of us the strange thing is that this possibility might mean… nothing much changes. As John Herrman in NY Mag noted: “Social-media communication is already substantially mediated by AI, and its example (platforms that address their own content glut with AI-powered algorithmic feeds) contains some suggestions about how this stuff might play out elsewhere or at least how it might feel.” We already have email systems that try to filter out the real emails you want from not just spam but also “promotions” and “updates” you don’t want to see. Social media companies already use algorithms to hide bots and spammers. So perhaps the A.I. revolution will just shift how this is all handled and produce nothing except even more money for a few tech companies.

Will the Flood of A.I. Text Break the Internet?

But I’m trying to be more hopeful these days, so let me propose an alternate ending. Let’s assume the torrent of “A.I.” text can’t be contained. Maybe these chatbots break the internet.

Not the entire internet of course, but the current social media stage of the internet. Maybe chatbots, by making all of the worst parts of the current era even worse, will just break it?

Even before ChatGPT and Dall-E, this stage of the internet felt like it was on its last legs. I don’t even need to detail the problems. Bots and trolls clogging up social media. Fake news websites. Global internet pile-ons of random strangers. Clickbait. Cambridge Analytica. Etc. Already, many people have been weaning themselves off social media and spending more time in private or semi-private spaces. Many of us now spend more and more time in Discords, Slacks, or, you know, reading Substacks than we do on Facebook or Twitter.

And every single problem of the social media age is exacerbated by “A.I.” Chatbots and image generators only make trolling, spamming, and disinformation campaigns even easier to do. It can only get worse from here.

So maybe we all wander around the Library of Blather and… just leave. When social media sites are filled with nothing except bots, spam, and trolls, we log off. When Google search is clogged with ChatGPT articles, we stop searching the whole internet altogether. I’m not predicting any kind of utopia or return to an idyllic state of nature. We won’t log off entirely. But the internet might increasingly look like a version of what it was before the social media age. A return to separate and selective communities. Reading trustworthy sources of information instead of whatever Uncle Bob posts from PatriotTruthLibertyNews.net. Closer communities. Deeper conversations.

And in other ways we may indeed log off. Many professors are talking about returning to in-class tests and papers, to remove the possibility of chatbot cheating. Literary magazines are debating returns to paper submissions, which would not prevent AI submissions but would cut down on them dramatically. If you have to pay for stamps on your chatbot stories, suddenly writing a thousand of them in a day doesn’t feel like a winning proposition. I’m not saying that chatbots breaking the social media internet will be a purely good thing. I’m saying: what else can we do? Wander the ever-expanding Library of Blather forever, trying to find the one human among a million bots?

Useful A.I. Uses

I should probably wrap up here by saying LLMs and other “A.I.” programs will certainly have their uses. It’s easy to think of many potential benefits. But most of them (more accurate Google Translate, better word processing grammar checks, cleaner automatic transcriptions of audio recordings) are merely improvements on existing functions. Hardly the stuff of the total revolution “A.I.” boosters claim. “AI is one of the most profound things we're working on as humanity,” Google (Alphabet) CEO Sundar Pichai said at a recent World Economic Forum meeting. “It's more profound than fire or electricity.” You can’t sell that with slightly better grammar checks.

Yet for whatever reason the current crop of “A.I.” companies is focused not on the useful functions of honing or winnowing text. They want to produce more and more and more of it until we’re buried in the rubbish.

If you like this newsletter, consider subscribing or checking out my recent science fiction novel The Body Scout that The New York Times called “Timeless and original…a wild ride, sad and funny, surreal and intelligent.”

Other works I’ve written or co-edited include Upright Beasts (my story collection), Tiny Nightmares (an anthology of horror fiction), and Tiny Crimes (an anthology of crime fiction).

One funny/sad thing that I know might happen goes like this:

A decade ago I wrote a couple of zombie stories to express myself. No one read them…

So as an efficient machine AI won’t scrape these stories, it won’t find the pen-name anywhere on the internet, so it’ll just say, “I’ll just repost them verbatim and change the names” and no one will know, unless of course it becomes a great AI novel licensed by Netflix and then I bring out the receipts…

Well, there will be problems and there will be solutions. Proof-of-human is already a thing in the blockchain space. If dating apps get inundated with chatbots, new dating apps will evolve emphasizing real in-person interactions.

If LLMs can write good enough fiction by themselves, and Stable Diffusion good enough art, well, then these things become abundant. I see no problem with that.

We don't have GAIs yet. When we do, things might be different (as humans themselves might become obsolete). But with the current crop of tools? An LLM is just another one in the stack.